Last updated on July 22, 2022

In collaboration with Ryan Saxe and Bobby Mills

No one: ………..

Us: Yes, of course we can build you something to predict how many wins your draft deck is going to get!

As far as I know, this is the first time anyone has ever created a tool to help you “predict” how good a Magic deck will be, and especially the first time anyone has applied artificial intelligence and machine learning to such a task.

This is an amazing technical achievement, and hopefully the first of many similar features to come from Draftsim and our MTGA tracker and assistant, Arena Tutor.

For years I've had people ask me if there was a way to “score” their drafts on Draftsim. I think we've finally achieved that, and more. This statistic is more meaningful than any simulated draft score I could create, because you are comparing yourself to other humans instead of to bots doing simulated drafts.

First things first, if you don't care about why this is awesome and how it works, and you just want to try the tool out, here's the link. And here's the link to Arena Tutor so you can have the functionality while you play Arena.

Download Arena Tutor to get predictions for your MTGA decks as soon as you finish building them.

Background and Methodology

This project is a collaboration between Arena Tutor (my developer/data scientist Bobby Mills and me) and limited writer and data scientist extraordinaire, Ryan Saxe. You may recall this isn't the first time we've worked with Ryan. He wrote an amazing article for us last year, called “Bot Drafting the Hard Way.”

Instead of creating bots that made pick decisions with deference to archetypes, this time we wanted to see if there was a way to assess the overall quality of a deck after the draft.

Previously we only had the draft decks from Draftsim's draft simulator to use as a dataset, but now we've got thousands of match results from Arena Tutor, so we can actually determine what types of decks have better outcomes than others.

To start with, we analyzed over 200,000 matches from Zendikar Rising limited across MTGA.

To ensure we had enough data, we combined and normalized match results across both best of one and best of three drafts.

Ryan then took this data and created a neural network that looked at how the individual cards contributed to an overall “deck.”

The goal was to maximize the likelihood of the observed results, so that the probability distribution of outcomes the model predicts for a deck sits close to the number of wins that deck actually got.
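In concrete terms, Ryan's model (described below) outputs a Gaussian for each deck: a predicted mean number of wins and a standard deviation. Here is a minimal sketch of what “maximizing the likelihood” means for that kind of output, written in plain Python rather than a neural network framework. The function names are hypothetical, and splitting the bell curve into whole-number bins from 0 to 7 wins (with the tails folded into the end bins) is our own assumption about how to read such an output, not a description of Draftsim's code.

```python
import math


def gaussian_nll(wins: float, mean: float, std: float) -> float:
    """Negative log-likelihood of an observed win count under Normal(mean, std**2).

    Minimizing this, averaged over many decks, is the same as maximizing the
    likelihood of the results those decks actually got.
    """
    var = std ** 2
    return 0.5 * (math.log(2 * math.pi * var) + (wins - mean) ** 2 / var)


def normal_cdf(x: float, mean: float, std: float) -> float:
    """P(X <= x) for X ~ Normal(mean, std**2)."""
    return 0.5 * (1.0 + math.erf((x - mean) / (std * math.sqrt(2.0))))


def win_probabilities(mean: float, std: float, max_wins: int = 7) -> list[float]:
    """Probability of each whole number of wins from 0 to max_wins.

    Win count k gets the mass between k - 0.5 and k + 0.5. Everything below
    0.5 is assigned to 0 wins and everything above max_wins - 0.5 to max_wins,
    so the probabilities sum to 1.
    """
    probs = []
    for k in range(max_wins + 1):
        lower = 0.0 if k == 0 else normal_cdf(k - 0.5, mean, std)
        upper = 1.0 if k == max_wins else normal_cdf(k + 0.5, mean, std)
        probs.append(upper - lower)
    return probs


if __name__ == "__main__":
    # Hypothetical deck: predicted 3.2 wins with a standard deviation of 1 win.
    mean, std = 3.2, 1.0
    print("NLL if it actually went 3 wins:", round(gaussian_nll(3, mean, std), 3))
    print("NLL if it actually went 7 wins:", round(gaussian_nll(7, mean, std), 3))
    for wins, prob in enumerate(win_probabilities(mean, std)):
        print(f"{wins} wins: {prob:.3f}")
```

A lower NLL means the predicted distribution fit the real result better. A training loop (for example in PyTorch, which ships a `GaussianNLLLoss`) would average this over many decks and adjust the network's weights to reduce it.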

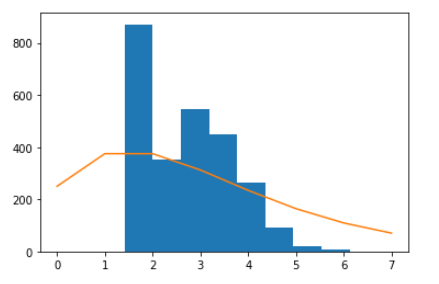

A graphic illustrating the distribution of wins given a 50% win rate (in orange) vs the distribution of win predictions from the model (blue).

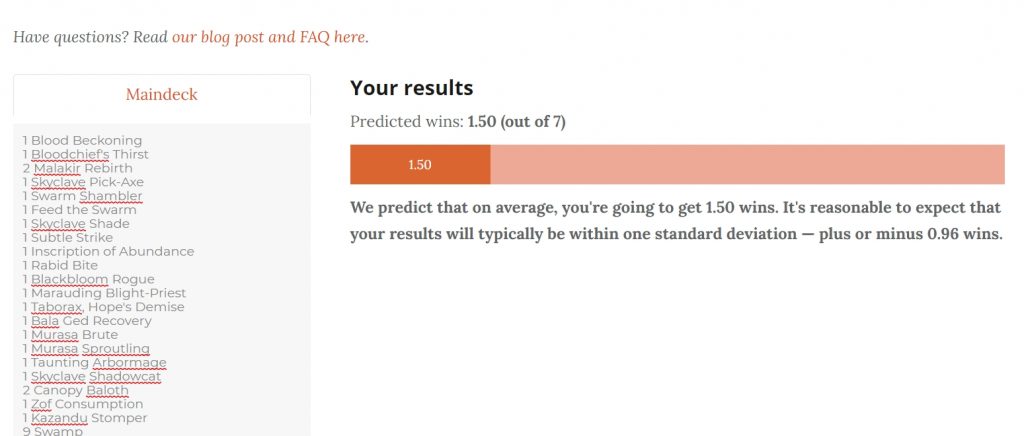

That process gave us the baseline we needed to say that the “prediction” is essentially the average number of wins that a deck like yours would get.
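For a sense of where a fixed-win-rate curve like the orange one in the first graphic comes from, here is a minimal sketch. It assumes every game is independent with the same win probability p and that a run ends at 7 wins or 3 losses, as in Bo1 Premier Draft on MTGA; we don't know exactly how the graphic was generated, and Bo3 drafts follow a different structure. The function name is hypothetical.

```python
from math import comb


def run_win_distribution(p: float, max_wins: int = 7, max_losses: int = 3) -> list[float]:
    """Probability of finishing a draft run with k wins, for k = 0..max_wins.

    Assumes independent games with a fixed win probability p, and a run that
    stops at max_wins wins or max_losses losses.
    """
    q = 1.0 - p
    dist = []
    for k in range(max_wins):
        # The run ends on the final loss; before it, k wins and
        # (max_losses - 1) losses can come in any order.
        dist.append(comb(k + max_losses - 1, k) * p**k * q**max_losses)
    # Reaching max_wins: the last game is a win, preceded by max_wins - 1 wins
    # and any number of losses short of elimination.
    dist.append(sum(comb(max_wins - 1 + l, l) * p**max_wins * q**l
                    for l in range(max_losses)))
    return dist


if __name__ == "__main__":
    dist = run_win_distribution(0.5)
    for wins, prob in enumerate(dist):
        print(f"{wins} wins: {prob:.3f}")
    print("expected wins:", round(sum(k * pr for k, pr in enumerate(dist)), 3))
```

With p = 0.5 this works out to 12.5% for 0 wins, about 9% for 7 wins, and an average of roughly 2.87 wins per run.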

Model and Prediction Accuracy

And now I'll toss it to Ryan to give his mission statement for the model he created.

Understanding what makes a deck good or bad is one of the skills that separates great players from good ones. If you show a great player a draft deck, they can tell you how many wins they expect that deck to get. Given the variance in the game, they can't always be spot on. However, it'll be rare for a great player to be off by more than two wins.

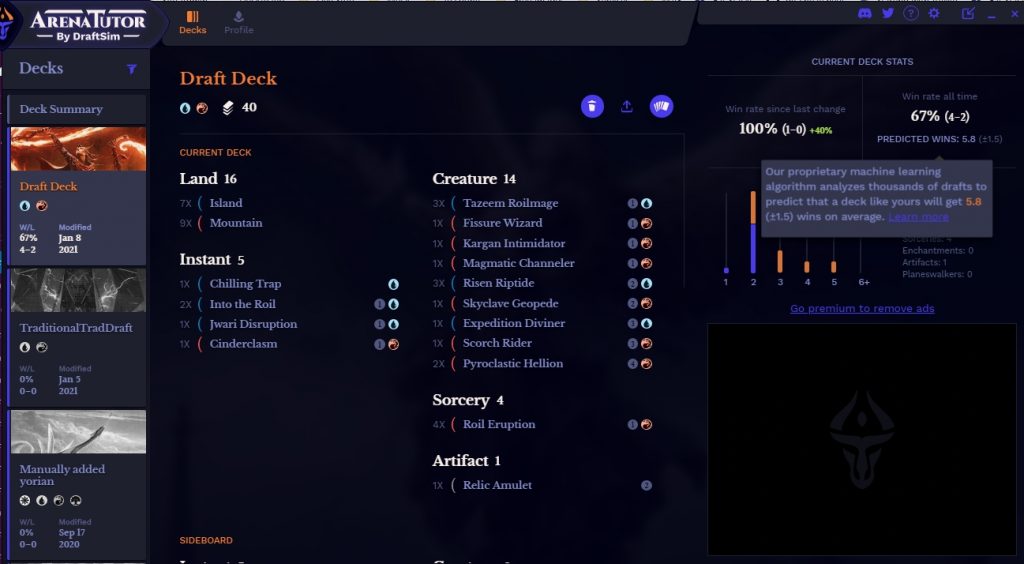

I set out to devise a machine learning algorithm that is comparably good at predicting the number of wins for a draft on Arena. This algorithm learns a Gaussian distribution: both the expected number of wins (the mean) and the variance around that mean, which together describe the probability of getting any number of wins in a draft on Arena.

Devising this model came with some challenges. How do you get the model to ever predict 6 or 7 wins when those quantities are rarer than 2 or 3 wins? Since decks only get played in one league, how can you learn a reasonable standard deviation without extra data points for that deck?

While I can't get into it here, we have some very clever solutions to these problems, and our initial tests of this beta version show promise. The learned standard deviations are not huge (often around 1 win) and appropriately bound the model's error: it is extremely rare for the model's predicted mean to be more than two standard deviations away from the actual number of wins.

What this means is that the model may say “you're going to get 5 wins” and you end up with 3 or 4 instead. That can happen. But, just as I said at the beginning, that happens with human experts too!

Ryan Saxe
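Ryan's claim that the predicted mean is rarely more than two standard deviations from the real result can be checked as coverage on decks the model wasn't trained on: the share of decks whose actual wins land within k predicted standard deviations of the predicted mean. A minimal sketch; the function name and the toy numbers are made up for illustration and are not Draftsim data.

```python
from typing import Sequence


def coverage(actual: Sequence[float], means: Sequence[float],
             stds: Sequence[float], k: float = 2.0) -> float:
    """Fraction of decks whose actual wins fall within k predicted std devs of the predicted mean."""
    if not (len(actual) == len(means) == len(stds)) or not actual:
        raise ValueError("need equal-length, non-empty sequences")
    hits = sum(abs(a - m) <= k * s for a, m, s in zip(actual, means, stds))
    return hits / len(actual)


if __name__ == "__main__":
    # Toy values for illustration only.
    actual = [2, 5, 0, 3, 7]
    means = [2.4, 3.6, 1.9, 3.1, 4.2]
    stds = [1.0, 1.1, 0.9, 1.0, 1.2]
    print(f"within 2 std devs: {coverage(actual, means, stds):.0%}")
    print(f"within 1 std dev: {coverage(actual, means, stds, k=1.0):.0%}")
```

On real data you would run this over a large held-out set; with only five toy decks the percentages say nothing about the actual model.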

Clarifying even further that predictions at the upper end are very rare, Ryan said,

For example, your deck needs to be INSANE for the model to give it an expected number of wins of 5+.

And this is a product of how much data we have. So please try out Arena Tutor so we can get more! I think you'll love it. As it stands, this is the v0, proof-of-concept version of the win predictor. It'll only get better.

Try it Out Yourself

OK now the really exciting part – I'm going to show you how to test it out yourself.

I know we're on the verge of Kaldheim coming out, but don't worry — as soon as we have enough data we'll be adding that set to the win predictor as well.

As I mentioned above, there are two places that you can find this model — on the website and inside Arena Tutor.

On Draftsim's Website

If you don't have a decklist handy, the easiest way is probably to generate a simulated ZNR sealed pool on Draftsim. Then click the magic wand to autobuild a deck.

I strongly recommend editing the deck a bit, because the 40 cards in the suggested deck will be very simplistic and won't account for MDFCs, synergy, etc.

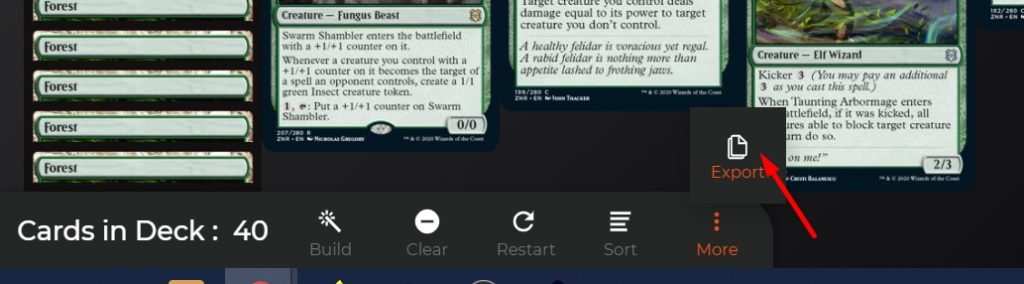

After that, export your deck using the button in the “More” menu on the toolbar.

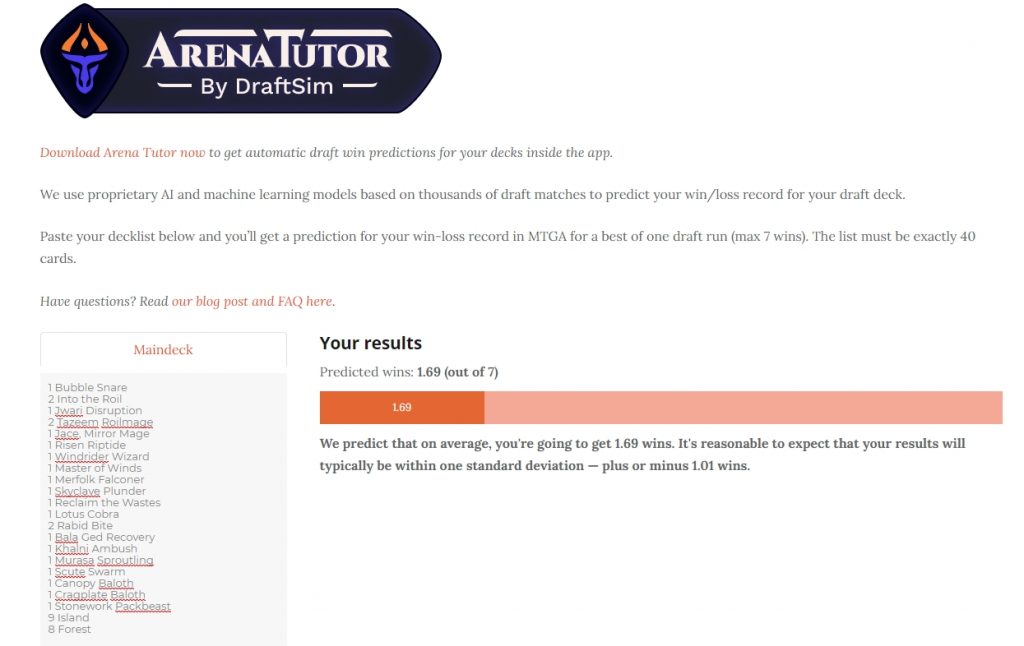

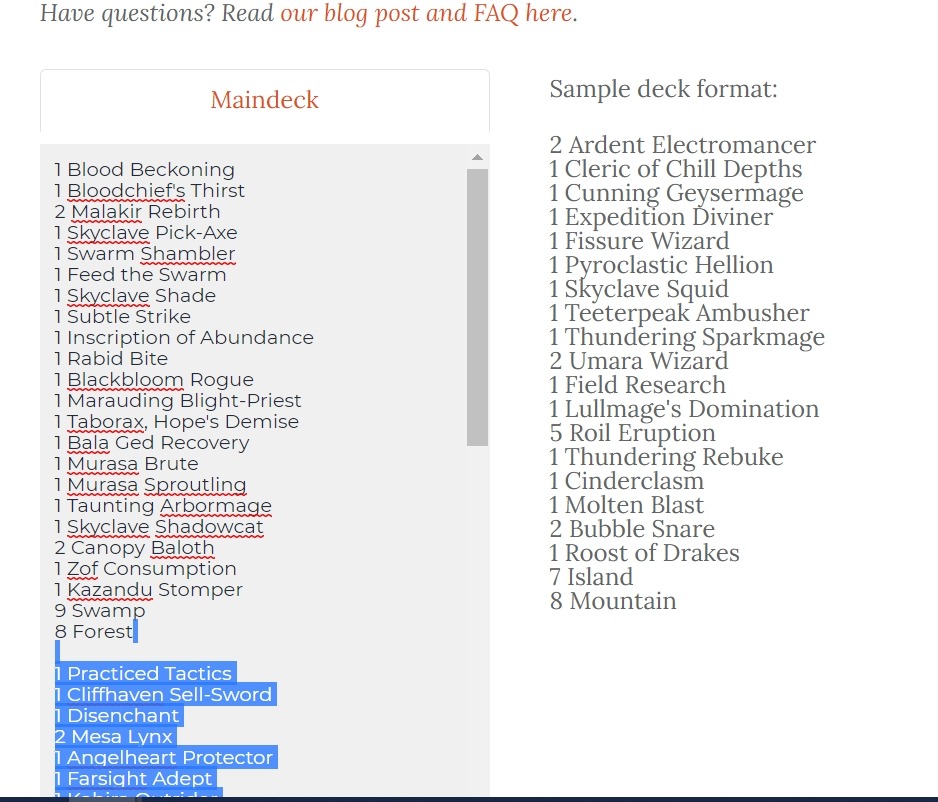

Then head over to the web app and paste your decklist into the “Maindeck” text box.

Be sure to delete the sideboard and extra line that are copied from the list — the deck must be exactly 40 cards for the prediction to work.
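If you'd like to double-check the count before clicking Predict, here is a small sketch that totals the maindeck of a plain-text decklist. It assumes an MTGA-style export: an optional `Deck` header, one card per line starting with a quantity, and the sideboard after a blank line or a `Sideboard` line. The helper names are hypothetical and are not part of the web app.

```python
import re

# Assumed line shape: a quantity, then the card name, with optional extras
# such as a set code and collector number that are ignored here.
CARD_LINE = re.compile(r"^\s*(\d+)x?\s+(.+?)\s*$")


def maindeck_count(decklist: str) -> int:
    """Total number of cards in the maindeck portion of a plain-text decklist."""
    total = 0
    started = False
    for line in decklist.splitlines():
        stripped = line.strip()
        if stripped.lower() == "deck":
            continue  # MTGA-style header line
        if not stripped:
            if started:
                break  # blank line after the maindeck: sideboard follows
            continue
        if stripped.lower().startswith("sideboard"):
            break
        match = CARD_LINE.match(stripped)
        if match is None:
            raise ValueError(f"can't read a quantity from line: {line!r}")
        total += int(match.group(1))
        started = True
    return total


def check_forty(decklist: str) -> None:
    """Raise ValueError unless the maindeck has exactly 40 cards."""
    count = maindeck_count(decklist)
    if count != 40:
        raise ValueError(f"maindeck has {count} cards; the win predictor needs exactly 40")


if __name__ == "__main__":
    # Placeholder card names; only the quantities matter here.
    example = "Deck\n17 Island\n23 Placeholder Spell\n\nSideboard\n1 Placeholder Sideboard Card\n"
    print(maindeck_count(example))  # 40
    check_forty(example)  # no exception: exactly 40 maindeck cards
```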

Click the “Predict” button and you're good to go!

What are some other ways you could get a couple of draft decks to test this out?

- Export your current or saved draft decks directly out of MTGA

- Grab one of your past Magic Online draft decks out of your user\Documents folder

- Copy a deck from one of your previous drafts directly using the button in Arena Tutor

- Manually type in the list from a physical draft deck you have lying around or from one of the many, many samples on our Twitter account Limited Decklists

The win predictor will work for any type of ZNR draft deck — you just need to format it properly!

Inside Arena Tutor

Of course, inside Arena Tutor, this process is seamless. Just save a new draft deck in MTGA and you'll automatically see the prediction come up on the lobby screen next to the deck's record.

Limitations, Caveats, Etc

I think you should first and foremost think of this app as a fun way to see how well you drafted compared to the average. It is certainly not an end-all-be-all, “you're going to get exactly this many wins” prediction oracle.

The result you get from the app is a mean, which means decks like yours got that result on average, based on what we have in our dataset. That's an important distinction.

If you're an above average player, great. And if you're below average… well, yeah.

We've all drafted those decks that should get us arrested for a “precrime”…

And even if you consider the “range” that the app gives you, it only spans one standard deviation on either side of the mean. The prediction is a normal distribution (a bell curve), which means only about 68% of results fall within that range. It's still very possible to end up with results outside it.
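The 68% figure comes straight from the normal distribution: the share of a bell curve lying within z standard deviations of its mean is erf(z/√2). A quick check with Python's standard library (the function name is ours):

```python
import math


def share_within(z: float) -> float:
    """Fraction of a normal distribution lying within z standard deviations of its mean."""
    return math.erf(z / math.sqrt(2.0))


if __name__ == "__main__":
    for z in (1, 2):
        print(f"within {z} std dev(s): {share_within(z):.1%}")
```

This prints about 68.3% for one standard deviation and 95.4% for two, so roughly one result in three lands outside the app's range. Real win totals are whole numbers capped at 7, so treat these shares as approximate.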

Finally, as I mentioned, we have limited data, which constrains the prediction accuracy a bit. As I write this, we only have the data to generate a model for Zendikar Rising; however, we're planning on adding this prediction functionality for all future sets as soon as we have enough match results for them.

Frequently Asked Questions

We could write a book about this model, but here are some answers to things you might be wondering about the new application.

Hey! I got fewer wins than it said I would. I feel disappointed.

I'm sorry that happened. But remember, the prediction is just an average, and as we explained above, it's just as likely you'll fall below the average as above it.

Hey! This model is stupid. I got more wins than it said I would.

Congrats, you have achieved godlike drafter status!

Why does it only take exactly 40 cards?

The way the model is designed, everything changes when you add additional cards. Since the sample data is overwhelmingly made up of 40-card decks, we had to work with what we were given.

Can I get a best of three prediction instead?

I could see doing this in the future, but right now we don't have enough data. I love best of 3 draft (and since I'm an elitist Boomer, I still think it's the only way to play “real” Magic). But since BO1 is faster, is the only ranked format, and is put front-and-center in the MTGA user interface, there is way more data.

If you really want a prediction for a BO3 deck, I recommend just copying it into the win predictor web front end and getting a rough idea from the BO1 prediction.

I got a <bad response> error

The model is only trained on real decks that people played. So it's possible that there's not enough data to make a prediction for the deck you submitted.

But also make sure that you used exactly 40 cards, cards from Zendikar Rising, and clicked the captcha button.

We Want Your Feedback

Is there such a thing as MTG free will anymore?? Why even bother trying to change your future?

This is just the first version of this model, so we are definitely expecting it to have some kinks and opportunities for improvement. So give it a try and please let us know what you think.

Were the predictions you got good? What do you think we could improve? What other similar things do you wish we would do?


2 Comments

I’m sure you’ve gotten this before, but I get predicted wins for decks with no lands or with only lands. Why do you think that is?

This is because of the (relatively) small sample size. There aren’t very many decks (probably zero) that anyone submitted with no lands or with all lands. So the model doesn’t have any analogous situations to rely on to know that a deck like that is unworkable.
